The AI Agent Tsunami Is Coming - And The Infrastructure Isn't Ready

Picture this: millions of AI agents, each executing hundreds of transactions per day, all hitting blockchain infrastructure simultaneously. This isn't science fiction; it's happening now. The convergence of AI and blockchain is creating an unprecedented surge in automated on-chain activity. Add pre-confirmations, the latest advance in block processing that significantly increases transaction speed and finality, and you have the perfect storm. We're about to see transaction volumes that make today's peak loads look quaint. Yet one major issue receives little attention: unreliable RPC is the biggest challenge facing every crypto project.

And it's about to get exponentially worse.

When Agents Meet Reality

AI agents don't tolerate infrastructure failures the way humans do. When your RPC endpoint goes down, a human might retry in a few minutes. An AI agent? It's already failed its task, potentially cascading failures across dependent systems.

This challenge is most acute for protocols that bridge on-chain and off-chain computing - particularly DEXs. These systems require seamless coordination between blockchain state and external compute resources.

Once AI agents begin handling market-making, arbitrage, and executing intricate DeFi strategies on a large scale, a single second of downtime can lead to failed transactions and missed opportunities.

The Node Operators' Nightmare

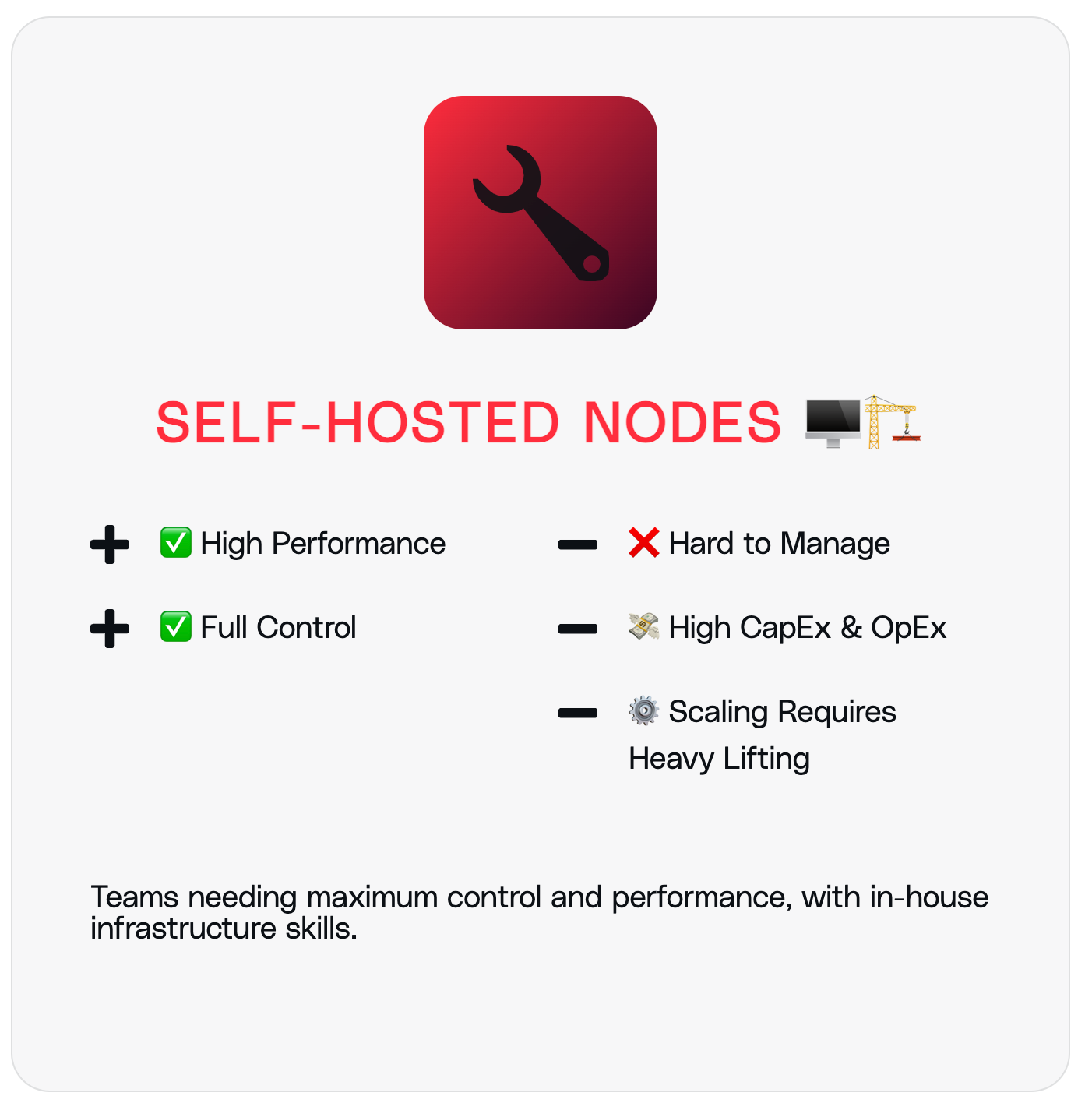

Running high-performance indexers across Ethereum and Move-based chains has taught us the hard truth about reliable RPC infrastructure. The operational complexity is staggering:

- Archive nodes are difficult to sync

- Snapshots are the only viable solution

- Real-time propagation is fragile

- Maintenance overhead never stops

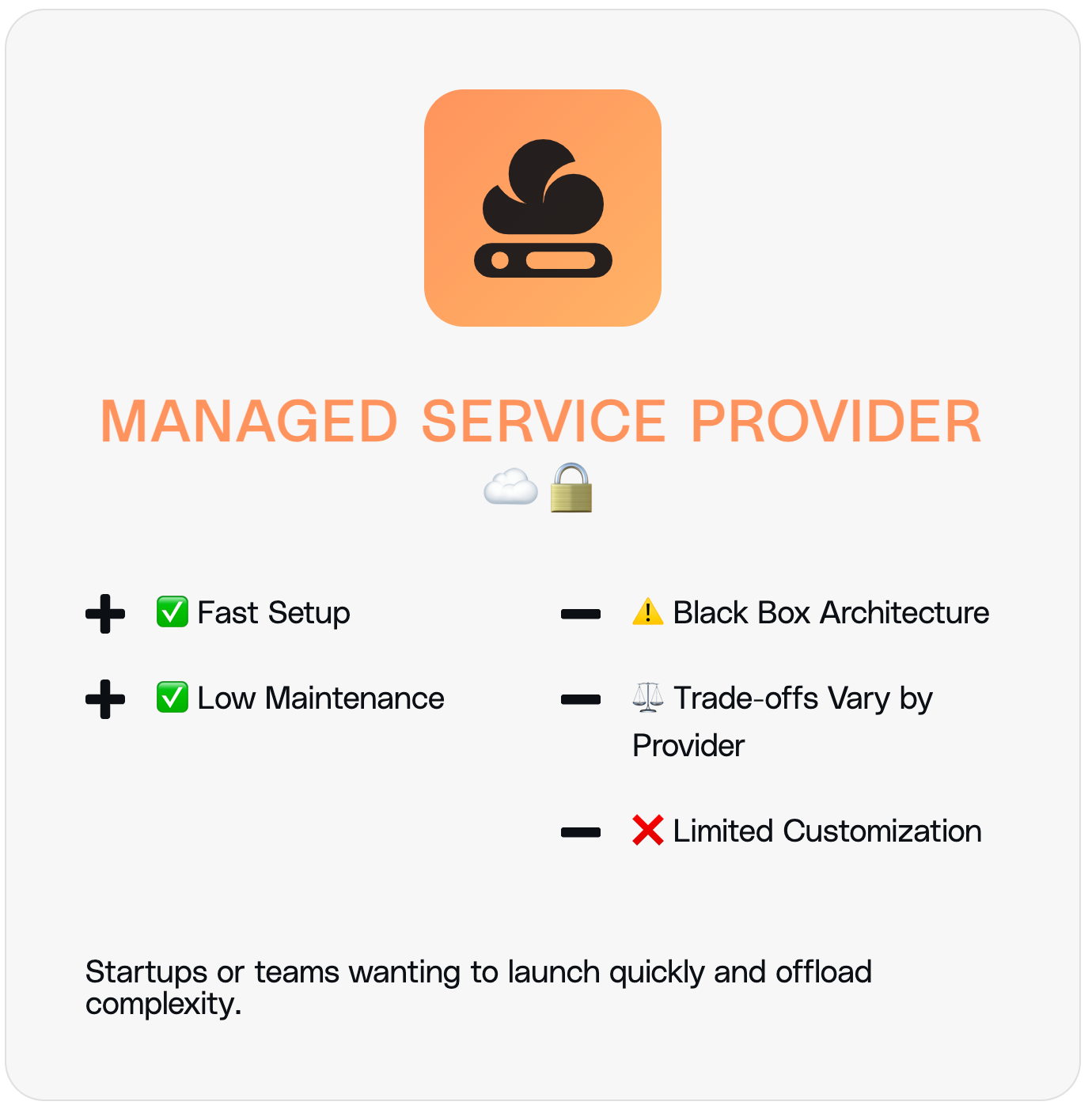

This operational complexity pushes most teams toward managed RPC services. But this creates a new problem.

The Fragmented Provider Ecosystem

The current RPC provider landscape forces developers into an uncomfortable compromise. Different providers excel at different use cases:

- Some optimise for historical data queries

- Others prioritise real-time block propagation

- Few handle both effectively

This fragmentation means dApps must integrate multiple vendors, manage different APIs, and constantly balance between providers. There's no comprehensive solution accessible to most builders in the space.

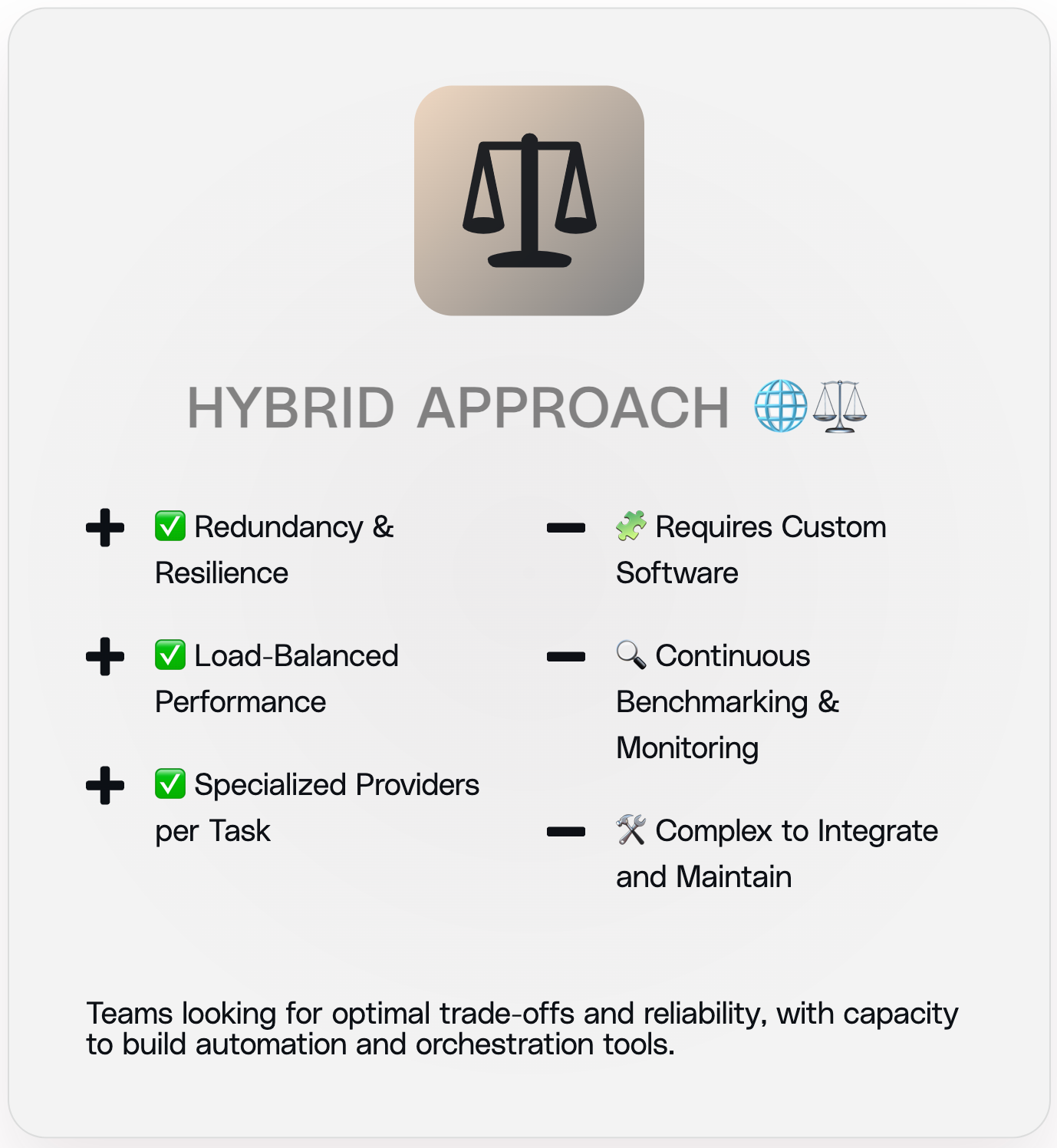

RPC Aggregation

RPC aggregation is a hybrid approach that attempts to capture the best of both worlds by combining a number of managed services with specialised workloads. It offers redundancy across multiple infrastructure types, load distribution based on query characteristics, and the ability to leverage specialised providers for specific tasks. However, these benefits come with the operational overhead of coordinating black-box providers. Teams usually end up reinventing the wheel, which takes time away from their core value proposition.
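The load distribution and redundancy described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not any provider's or Pangea's implementation: the `Provider` and `AggregatingRouter` names, the pool labels, and the rule that treats `eth_getLogs` as historical work are all hypothetical, and providers are modelled as plain callables rather than real JSON-RPC clients.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# A provider is modelled as a plain callable (method, params) -> result.
# In practice this would wrap a JSON-RPC client; callables keep the sketch runnable.
RpcCall = Callable[[str, list], object]


@dataclass
class Provider:
    name: str
    call: RpcCall


class AggregatingRouter:
    """Picks a provider pool by workload kind and fails over within that pool."""

    # Hypothetical classification rule: log scans count as historical work.
    HISTORICAL_METHODS = {"eth_getLogs"}

    def __init__(self, pools: Dict[str, List[Provider]]):
        self.pools = pools  # e.g. {"historical": [...], "realtime": [...]}

    def classify(self, method: str) -> str:
        return "historical" if method in self.HISTORICAL_METHODS else "realtime"

    def request(self, method: str, params: list) -> Tuple[str, object]:
        errors = []
        for provider in self.pools[self.classify(method)]:
            try:
                return provider.name, provider.call(method, params)
            except Exception as exc:  # any failure triggers failover to the next provider
                errors.append(f"{provider.name}: {exc}")
        raise RuntimeError("all providers failed: " + "; ".join(errors))
```

Even this toy version hints at the overhead: each pool needs its own ordering, error handling, and classification rules, and a real deployment would also have to deal with rate limits and response differences between vendors.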

Let's go through a study on RPC performance to demonstrate the problems and show why RPC aggregation is difficult.

Our Study: Quantifying RPC Performance

We conducted a brief study of the current state of RPC infrastructure, assessing how well providers meet Pangea's indexing requirements. Indexing is one of the most demanding uses of blockchain data. We regularly assess major providers and dozens of boutique providers for performance and reliability, and this study is part of those ongoing evaluations.

Test Configuration

The chain and providers used in this study were chosen as representative of the different trade-offs we face and are by no means comprehensive.

Chain: Sonic

Providers: Alchemy (free-tier), Ankr (free-tier), BlockPi (free-tier), CallStatic (enterprise), Tenderly (enterprise), DRPC (free-tier)

Tests:

- Historical backfill

- Real-time processing

- Toolbox synchronisation (complex query patterns)

Evaluation Methodology

We developed a weighted scoring system that adapts to different operational modes:

Metrics Collected:

- Block lag (% time behind, average blocks behind)

- Data throughput (requests per second × response size)

- Speed (average bytes per second)
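As a rough illustration of how the block lag and speed metrics could be derived from raw measurements, here is a minimal sketch. It assumes samples are taken at even intervals, so that the share of samples behind the head approximates "% time behind", and that "average blocks behind" is averaged over all samples. The `Sample` fields and function names are hypothetical and are not taken from the study's tooling.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Sample:
    reference_head: int   # highest block height seen across all sources at sample time
    provider_head: int    # block height this provider reported
    response_bytes: int   # size of the provider's response payload
    elapsed_s: float      # wall-clock time the request took


def block_lag(samples: List[Sample]) -> Tuple[float, float]:
    """Return (% of samples behind the reference head, average blocks behind)."""
    lags = [max(0, s.reference_head - s.provider_head) for s in samples]
    pct_behind = 100.0 * sum(1 for lag in lags if lag > 0) / len(lags)
    avg_blocks_behind = sum(lags) / len(lags)
    return pct_behind, avg_blocks_behind


def bytes_per_second(samples: List[Sample]) -> float:
    """Total bytes received divided by total request time."""
    return sum(s.response_bytes for s in samples) / sum(s.elapsed_s for s in samples)
```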

Scoring Weights:

- Synchronisation Mode: 10% freshness, 40% throughput, 30% speed

- Real-time Mode: 50% freshness, 10% throughput, 30% speed
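A weighted score of this kind can be sketched as follows. The weights are copied from the list above; as listed they sum to 80% and 90% rather than 100%, so this sketch divides by the weight total to keep scores on a 0 to 1 scale. It also assumes each metric has already been normalised to the range 0 to 1, with higher being better. The mode keys and the `score` function are hypothetical names.

```python
from typing import Dict

# Weights as listed in the article, keyed by operational mode.
WEIGHTS: Dict[str, Dict[str, float]] = {
    "synchronisation": {"freshness": 0.10, "throughput": 0.40, "speed": 0.30},
    "realtime": {"freshness": 0.50, "throughput": 0.10, "speed": 0.30},
}


def score(metrics: Dict[str, float], mode: str) -> float:
    """Weighted average of normalised metrics (each in 0..1) for the given mode."""
    weights = WEIGHTS[mode]
    total = sum(weights.values())  # normalise, since listed weights do not sum to 1
    return sum(metrics[name] * weight for name, weight in weights.items()) / total


# A hypothetical provider with stale data but excellent throughput and speed.
bulk_provider = {"freshness": 0.0, "throughput": 1.0, "speed": 1.0}
sync_score = score(bulk_provider, "synchronisation")
realtime_score = score(bulk_provider, "realtime")
```

With these inputs the same provider scores far higher in synchronisation mode than in real-time mode, which is how a provider with poor freshness can still rank first for backfill.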

The Results: A Tale of Specialisation

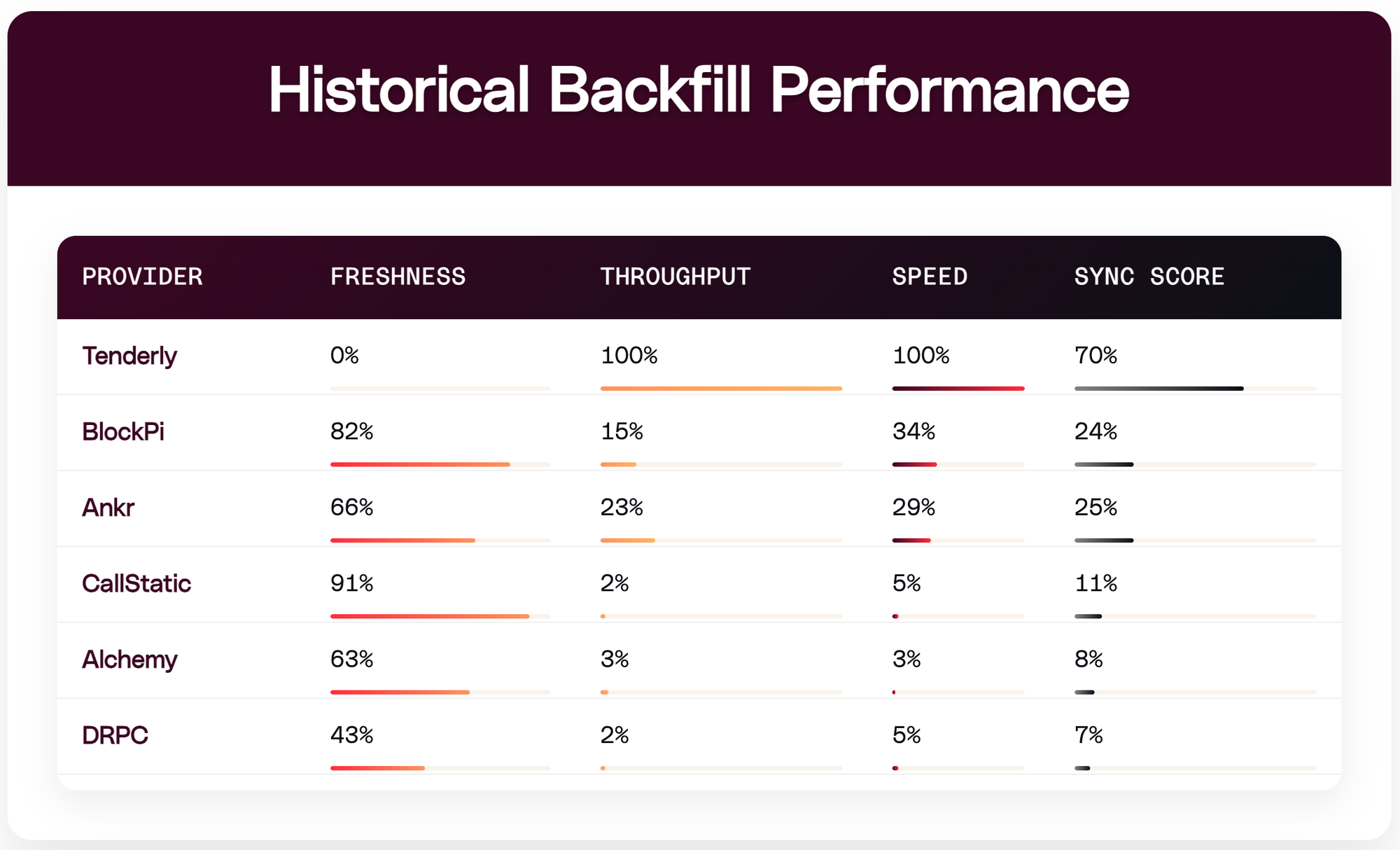

Test 1: Historical Backfill Performance

Winner: Tenderly - Despite a freshness score of zero, Tenderly's exceptional throughput and speed make it ideal for historical data synchronisation.

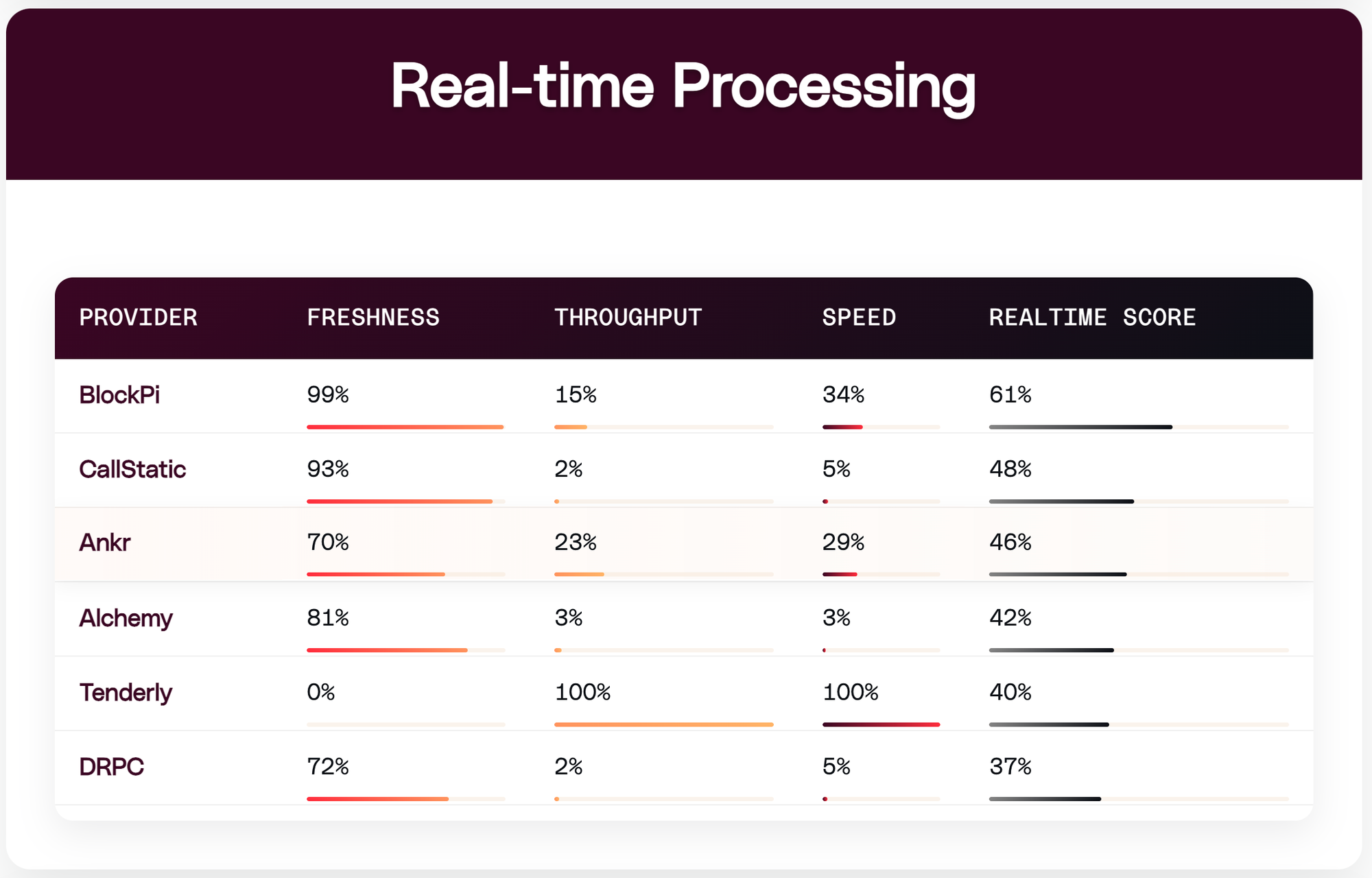

Test 2: Real-time Processing

Winner: BlockPi - Exceptional data freshness makes it the clear choice for real-time applications, with CallStatic as a strong alternative.

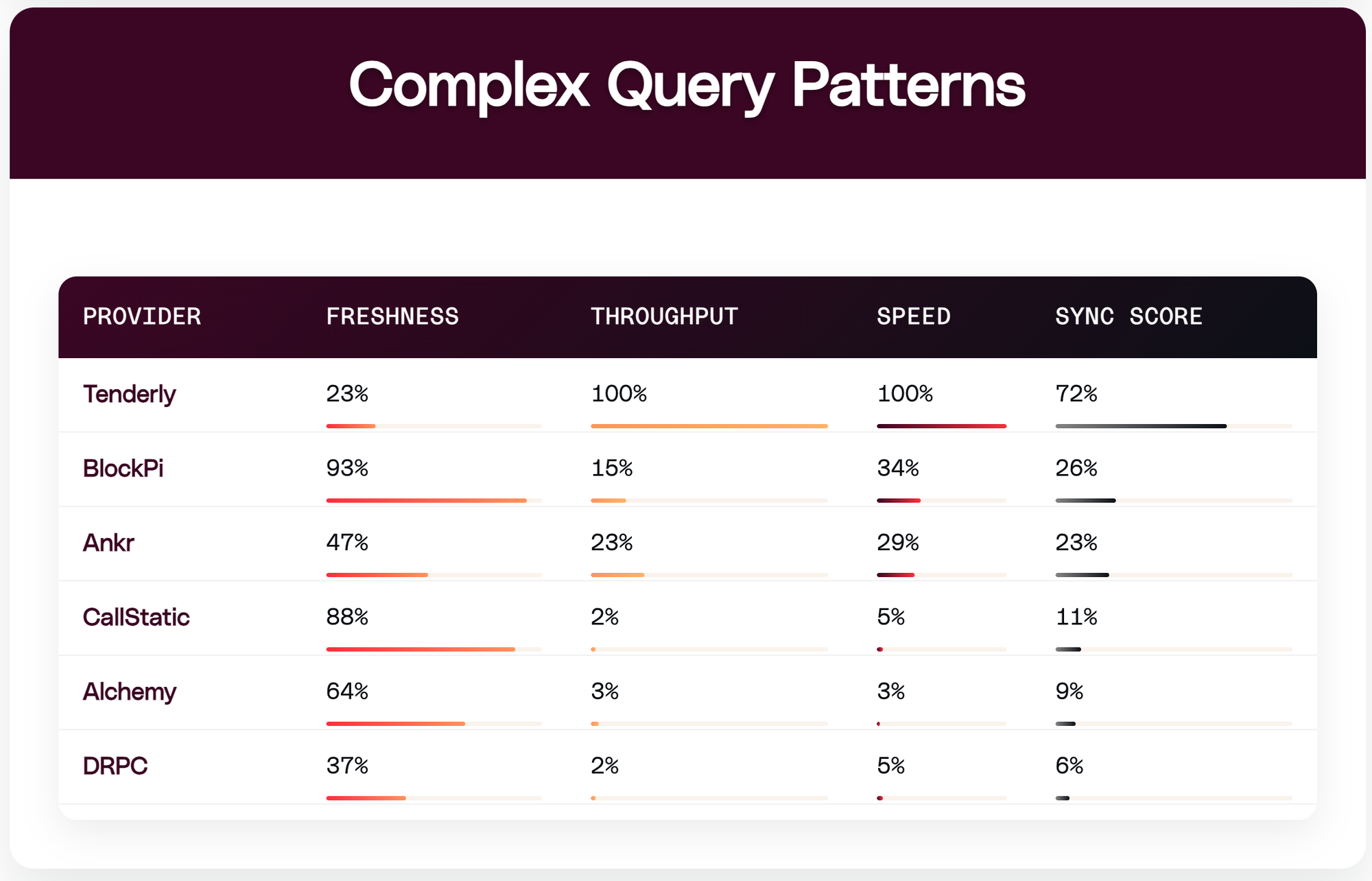

Test 3: Complex Query Patterns

Winner: Tenderly - Again dominates for complex synchronisation workloads.

The Infrastructure Dilemma

Our findings reveal a fundamental challenge in blockchain infrastructure:

The Free-Tier Trap

Free tiers are problematic for production use. Rate limits kick in at the worst times, and sharing a single provider account across multiple chains skews performance metrics. A provider might be technically superior yet appear worst simply because it hit its rate limits during the test.

The PAYG Myth

Pay-as-you-go models are largely non-viable. The capital costs of running node infrastructure force providers to push subscriptions. Those offering PAYG typically deliver inferior performance.

The Specialisation Reality

No single provider excels at all use cases. Teams need:

- Tenderly for historical synchronisation

- BlockPi or CallStatic for real-time data

- Multiple fallbacks for reliability

Lessons from Running Production Infrastructure

Operating our own node fleet alongside external providers has taught us crucial lessons:

- VM-based infrastructure creates hidden bottlenecks

- Caching strategies conflict with real-time needs

- Rate limiting is often opaque

- Network topology significantly impacts performance

- Prepare for high load scenarios

This study represents our ongoing commitment to infrastructure transparency. As the blockchain ecosystem evolves, so too must our approach to the fundamental layers that make it all possible.

The Path Forward

The future of blockchain depends on solving these infrastructure challenges. Until we do, every project will continue to face the same hidden crisis: the infrastructure beneath our decentralised dreams remains frustratingly centralised and unreliable.

The solution isn't building yet another RPC provider; it's creating an intelligent orchestration layer that abstracts away the complexity of self-hosting infrastructure entirely. Pangea Network represents this new paradigm: a unified platform that coordinates across multiple node operators and specialised service providers to deliver consistent, high-performance compute for aggregations across blockchain resources.

Rather than forcing teams to choose between self-hosted complexity and vendor lock-in, Pangea provides the orchestration layer that AI agents actually need. Our platform intelligently routes queries based on real-time performance metrics, automatically handles failover scenarios, and normalises responses across different providers. This enables teams to focus on building indexing services, analytics platforms, and AI workflows instead of wrestling with infrastructure.
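To make routing on real-time performance metrics concrete, here is a generic sketch of one common technique: ranking providers by an exponentially weighted moving average (EWMA) of observed latency. It does not describe Pangea's implementation. The `LatencyTracker` class, the `alpha` smoothing factor, and the provider names are assumptions chosen for illustration.

```python
from typing import Dict, List


class LatencyTracker:
    """Keeps a smoothed latency estimate per provider and ranks them fastest first."""

    def __init__(self, alpha: float = 0.2):
        self.alpha = alpha                 # weight given to the newest observation
        self.ewma: Dict[str, float] = {}   # provider name -> smoothed latency in ms

    def record(self, provider: str, latency_ms: float) -> None:
        previous = self.ewma.get(provider)
        if previous is None:
            self.ewma[provider] = latency_ms  # first observation seeds the average
        else:
            self.ewma[provider] = self.alpha * latency_ms + (1 - self.alpha) * previous

    def ranked(self) -> List[str]:
        # Lowest smoothed latency first; a router would try providers in this order.
        return sorted(self.ewma, key=lambda name: self.ewma[name])
```

A router built on this would call `record` after every response and consult `ranked` before each request, so a provider that slows down during a traffic spike drops down the list without manual intervention.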

By combining our optimised node fleet with external providers, we create an elastic infrastructure that scales dynamically with demand while maintaining high-quality service guarantees. This elastic approach ensures real-time data stays current even during traffic spikes, automatically provisioning additional capacity when AI agents require it. We publish transparent benchmarks demonstrating that resource coordination at scale delivers the reliability and performance that AI agents demand.

The future of blockchain infrastructure isn't about choosing the right provider; it's about having an orchestration layer smart enough to make those decisions automatically.

Pangea Network is reshaping how decentralised applications access blockchain resources, delivering the quality of service AI agents and high-performance applications demand.

Ready to join the next revolution in blockchain infrastructure? We invite node operators to register their interest and apply for our devnet programme.

Developers who want early access can join our community Discord for updates.