Inside Reth: The journey of a transaction through Ethereum

By Andy Thomason.

Reth is an exciting new Ethereum execution client written in Rust, from the same stable as the Foundry contract authoring tools. Rust brings both speed and stability to the client: it is a strongly typed, compiled language with compile-time and run-time checks that guard against undefined behaviour.

You can learn about Ethereum itself from the Ethereum Foundation's excellent documentation (see further reading below).

In this post we will set up a simple local network with Reth and follow the path of a transaction through the Ethereum ecosystem from submission to log queries in the Reth source code.

We will be referencing the Reth project on GitHub as we go. Reth is still in Alpha, but is already an excellent platform for writing chain analytics and experimenting with protocols and file formats, which is our focus at Pangea.

Setting up Reth

For our example, we are going to build and run Reth from the source code. This will enable us to use traces to show the progress of our transaction.

First, clone Reth and install Rust and Foundry (instructions for Unix).

    # Install Rustup
    curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh
    rustup

    # Install Foundry
    curl -L https://foundry.paradigm.xyz | bash
    foundryup

    # Clone and build Reth
    git clone https://github.com/paradigmxyz/reth.git
    cd reth
    cargo install --path bin/reth --debug

Now we can run Reth, which has a number of commands. Before we do this, we are going to create a JSON genesis file for our pretend Ethereum network:

    {
      "nonce": "0x42",
      "timestamp": "0x0",
      "extraData": "0x5343",
      "gasLimit": "0x1388",
      "difficulty": "0x400000000",
      "mixHash": "0x0000000000000000000000000000000000000000000000000000000000000000",
      "coinbase": "0x0000000000000000000000000000000000000000",
      "alloc": {
        "0xd143C405751162d0F96bEE2eB5eb9C61882a736E": {
          "balance": "0x4a47e3c12448f4ad000000"
        },
        "0x944fDcD1c868E3cC566C78023CcB38A32cDA836E": {
          "balance": "0x4a47e3c12448f4ad000000"
        }
      },
      "number": "0x0",
      "gasUsed": "0x0",
      "parentHash": "0x0000000000000000000000000000000000000000000000000000000000000000",
      "config": {
        "ethash": {},
        "chainId": 12345,
        "homesteadBlock": 0,
        "eip150Block": 0,
        "eip155Block": 0,
        "eip158Block": 0,
        "byzantiumBlock": 0,
        "constantinopleBlock": 0,
        "petersburgBlock": 0,
        "istanbulBlock": 0,
        "berlinBlock": 0,
        "londonBlock": 0,
        "terminalTotalDifficulty": 0,
        "terminalTotalDifficultyPassed": true,
        "shanghaiTime": 0
      }
    }

chain.json

This sets up a chain with chain ID 12345 and two funded accounts. Our goal is to transfer some Pseudo-Eth from one account to the other. We will use the Shanghai hard fork for all our blocks.
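The quantities in the genesis file are 0x-prefixed hexadecimal strings. As a minimal sketch of how such a value can be read, the hypothetical helper below (parse_quantity is not part of Reth) converts one into a u128 using only the standard library. For example, the gasLimit value 0x1388 is 5000 in decimal.

```rust
/// Parse a 0x-prefixed hexadecimal quantity, as used in the genesis file,
/// into a u128. This is an illustrative helper, not a Reth API.
pub fn parse_quantity(s: &str) -> Result<u128, String> {
    let digits = s
        .strip_prefix("0x")
        .ok_or_else(|| format!("missing 0x prefix: {s}"))?;
    // Reject empty input and anything that is not a plain hex digit
    // (from_str_radix alone would also accept a leading '+').
    if digits.is_empty() || !digits.bytes().all(|b| b.is_ascii_hexdigit()) {
        return Err(format!("not a hex quantity: {s}"));
    }
    u128::from_str_radix(digits, 16).map_err(|e| format!("cannot parse {s}: {e}"))
}
```

A u128 is wide enough for the balances in this genesis file, although real clients use a 256-bit integer type for balances.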

Now we can run Reth using our chain spec. In this example we will not use a Beacon client like Lighthouse, as ours is only a small local chain. Instead we will use the --auto-mine option to mine blocks as soon as we send transactions, in a similar manner to the Anvil tool in Foundry. We disable the peer-to-peer network with -d and use --http to enable the JSON-RPC interface.

    rm -rf /tmp/screth
    RUST_LOG="info" reth node -d --chain chain.json \
        --datadir /tmp/screth --auto-mine --http

If all goes well, we should get some logs:

    2023-08-02T11:59:08.165187Z INFO reth::cli: reth 0.1.0-alpha.4 (dee14c7b) starting
    2023-08-02T11:59:08.177377Z INFO reth::cli: Configuration loaded path="/tmp/screth/reth.toml"
    2023-08-02T11:59:08.177494Z INFO reth::cli: Opening database path="/tmp/screth/db"
    2023-08-02T11:59:08.184772Z INFO reth::cli: Database opened
    2023-08-02T11:59:08.186710Z INFO reth::cli: Pre-merge hard forks (block based):
    ...

Now, in another terminal, we can use the cast tool to send a transaction which will transfer one Pseudo-Wei from one account to another.

    cast send \
        --from 0xd143C405751162d0F96bEE2eB5eb9C61882a736E \
        --value 1 --legacy --private-key \
        0xcece4f25ac74deb1468965160c7185e07dff413f23fcadb611b05ca37ab0a52e \
        0x944fDcD1c868E3cC566C78023CcB38A32cDA836E

We can check the balance of the destination account before and after the transaction. This should show an increase of one per transaction sent.

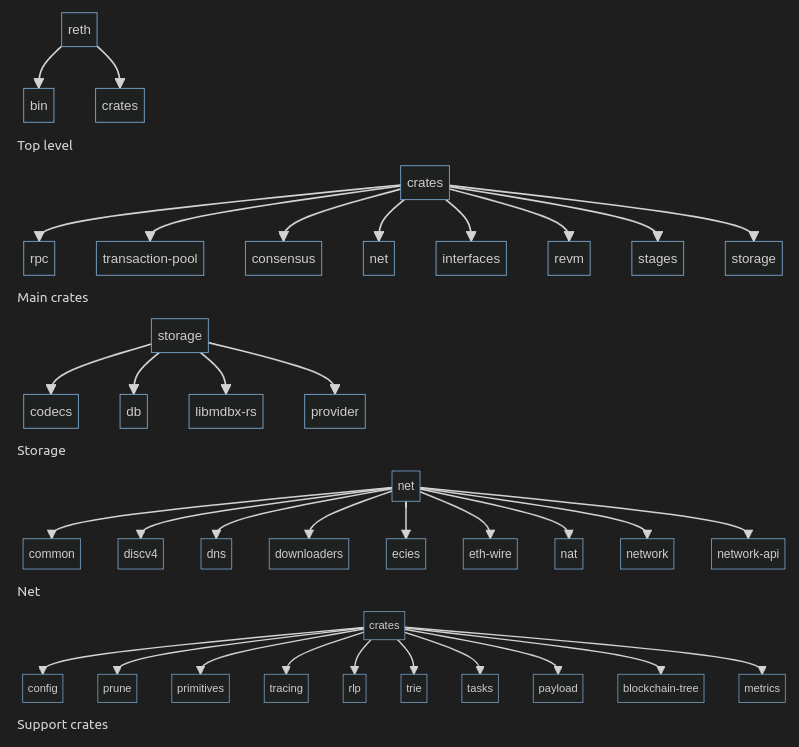

    cast balance 0x944fDcD1c868E3cC566C78023CcB38A32cDA836E

Now we deep-dive into the code to see how this all works. There is a summary of the project layout here:

https://github.com/paradigmxyz/reth/blob/main/docs/repo/layout.md

Here is a diagram of the layout.

Now we are ready to see how our transaction is processed in Reth.

Step 1: JSON-RPC

The journey of our transaction starts and ends in the rpc crate. When we run the cast send command, cast uses HTTP on localhost:8545 to interact with the chain.
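To make the HTTP interaction concrete, here is a minimal sketch of the JSON-RPC 2.0 request body that a client such as cast would post to localhost:8545 for a balance query. The helper names json_rpc_request and get_balance_request are hypothetical, and the string is assembled by hand only to keep the example free of dependencies. A real client would use a JSON library.

```rust
/// Build the body of a JSON-RPC 2.0 request. Each entry in `params` must
/// already be a valid JSON value (for example a quoted string).
/// Illustrative helper only, not part of Reth or cast.
pub fn json_rpc_request(id: u64, method: &str, params: &[&str]) -> String {
    format!(
        r#"{{"jsonrpc":"2.0","id":{},"method":"{}","params":[{}]}}"#,
        id,
        method,
        params.join(",")
    )
}

/// Body of an eth_getBalance request for `address` at the latest block.
pub fn get_balance_request(id: u64, address: &str) -> String {
    let quoted_address = format!("\"{address}\"");
    json_rpc_request(
        id,
        "eth_getBalance",
        &[quoted_address.as_str(), "\"latest\""],
    )
}
```

The server answers with a JSON object carrying the same id and a result field, which for eth_getBalance is a hexadecimal quantity.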

We can trace the rpc crate using RUST_LOG="rpc::eth=trace" which will give the JSON-RPC calls that cast send executes.

(abbreviated)

    TRACE rpc::eth: Serving eth_chainId
    TRACE rpc::eth: Serving eth_getTransactionCount
    TRACE rpc::eth: Serving eth_gasPrice
    TRACE rpc::eth: Serving eth_estimateGas
    TRACE rpc::eth: Serving eth_sendRawTransaction
    TRACE rpc::eth: Serving eth_getTransactionReceipt
    TRACE rpc::eth: Serving eth_getTransactionByHash
    TRACE rpc::eth: Serving eth_getTransactionReceipt

Each of the calls is handled in eth/api/server.rs. For example, eth_getTransactionCount is handled here:

    /// Handler for: `eth_getTransactionCount`
    async fn transaction_count(
        &self,
        address: Address,
        block_number: Option<BlockId>,
    ) -> Result<U256> {
        trace!(target: "rpc::eth", ?address, ?block_number,
            "Serving eth_getTransactionCount");
        Ok(self
            .on_blocking_task(
                |this| async move {
                    this.get_transaction_count(address, block_number)
                },
            )
            .await?)
    }

The API environment that this runs in consists of a Provider, a Pool and a Network. If we specify "pending" as the block number, this call will first look in the pool for any pending transactions. Otherwise we look in the provider, which provides a standardised view of the state database, to get the nonce of the account in question. We can trace the provider calls with RUST_LOG="providers=trace".
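The lookup order described above can be sketched with two in-memory maps standing in for the pool and the provider. Everything here (Node, BlockTag, the field names) is hypothetical and much simpler than Reth's real types. It also assumes that the pending count is one more than the highest nonce waiting in the pool.

```rust
use std::collections::HashMap;

pub type Address = [u8; 20];

/// Which block the caller asked about (a simplified stand-in for BlockId).
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub enum BlockTag {
    Latest,
    Pending,
}

/// Hypothetical in-memory stand-ins for the pool and the provider.
#[derive(Default)]
pub struct Node {
    /// Highest nonce of any pending transaction, per sender.
    pub pool_highest_nonce: HashMap<Address, u64>,
    /// Account nonce stored in the state database, per address.
    pub state_nonce: HashMap<Address, u64>,
}

impl Node {
    /// Return the next nonce for `address`.
    pub fn transaction_count(&self, address: Address, tag: BlockTag) -> u64 {
        // For "pending", consult the pool first.
        if tag == BlockTag::Pending {
            if let Some(highest) = self.pool_highest_nonce.get(&address) {
                return highest + 1;
            }
        }
        // Otherwise fall back to the state; unknown accounts have nonce 0.
        self.state_nonce.get(&address).copied().unwrap_or(0)
    }
}
```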

When we send the signed transaction to the client, we use this handler:

    /// Handler for: `eth_sendRawTransaction`
    async fn send_raw_transaction(&self, tx: Bytes) -> Result<H256> {
        trace!(target: "rpc::eth", ?tx, "Serving eth_sendRawTransaction");
        Ok(EthTransactions::send_raw_transaction(self, tx).await?)
    }

This uses ECDSA recovery to get the sender from the v, r and s fields of the transaction and then adds the transaction to the transaction pool. Like the Provider, the transaction pool is abstracted through a trait in the transaction_pool crate which could allow for alternative pool implementations.
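Full ECDSA recovery needs a secp256k1 library, so it is not shown here. As a smaller illustration of the first step, the sketch below splits the v field of a legacy transaction into its parts. Under EIP-155, v = chain_id * 2 + 35 + recovery_id, while older transactions use 27 or 28. The function name decode_v is hypothetical.

```rust
/// Split the `v` value of a legacy transaction signature into an optional
/// chain ID and the recovery ID (0 or 1) used for public key recovery.
/// Returns None for values that are not valid legacy `v` values.
pub fn decode_v(v: u64) -> Option<(Option<u64>, u8)> {
    match v {
        // Pre-EIP-155 signatures carry no chain ID.
        27 | 28 => Some((None, (v - 27) as u8)),
        // EIP-155: v = chain_id * 2 + 35 + recovery_id.
        v if v >= 35 => Some((Some((v - 35) / 2), ((v - 35) % 2) as u8)),
        _ => None,
    }
}
```

Since our cast send command uses --legacy on chain 12345, the signed transaction carries a v of either 24725 or 24726.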

Step 2: Network

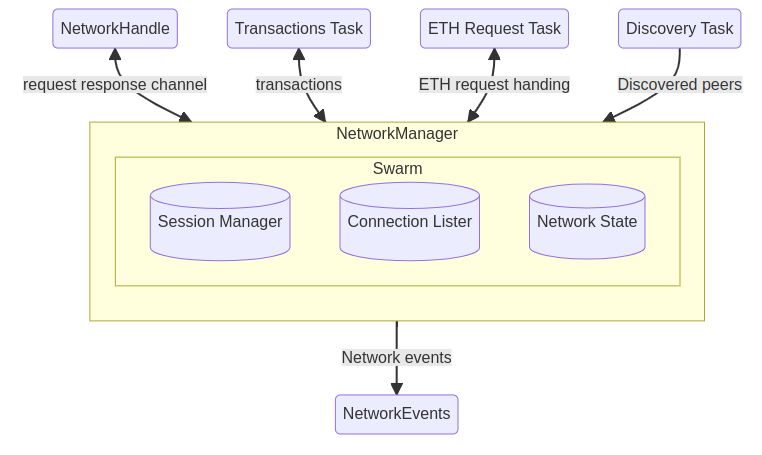

Once we send a transaction to the transaction pool, it will be distributed to other network nodes. We only have one node in our demo case, so this is not necessary. The crates in the network directory handle communications with peers in the network. There is excellent developer documentation for the network crates here:

https://github.com/paradigmxyz/reth/blob/main/docs/crates/network.md

Transaction propagation starts with a call to on_new_transactions() in transactions.rs. This sends either a full transaction or a transaction hash to all connected peers. See:

https://paradigmxyz.github.io/reth/docs/reth_eth_wire/types/broadcast/index.html

The TransactionManager handles incoming transaction messages, adding transactions to the pools of peers as required.
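The choice between sending a full transaction and sending only its hash can be sketched as follows. This is not Reth's actual policy: the types and the rule used here (skip peers that have already seen the hash, send the full transaction to the first full_count remaining peers and the hash to the rest) are assumptions made for illustration.

```rust
use std::collections::HashSet;

pub type TxHash = [u8; 32];

/// What we send to one peer (hypothetical message type).
#[derive(Debug, PartialEq, Eq)]
pub enum Announcement {
    Full(Vec<u8>),
    Hash(TxHash),
}

/// A connected peer and the transaction hashes it is known to have seen.
pub struct Peer {
    pub id: u32,
    pub seen: HashSet<TxHash>,
}

/// Decide what to send to each peer for one new transaction.
pub fn plan_broadcast(
    peers: &mut [Peer],
    hash: TxHash,
    raw_tx: &[u8],
    full_count: usize,
) -> Vec<(u32, Announcement)> {
    let mut plan = Vec::new();
    for peer in peers.iter_mut() {
        // `insert` returns false when the peer already knew the hash.
        if !peer.seen.insert(hash) {
            continue;
        }
        let message = if plan.len() < full_count {
            Announcement::Full(raw_tx.to_vec())
        } else {
            Announcement::Hash(hash)
        };
        plan.push((peer.id, message));
    }
    plan
}
```

Tracking what each peer has seen means a second broadcast of the same transaction produces no messages at all.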

Now our transaction has been distributed to the entire network and we are ready for mining.

Step 3: Mining

In this example, we are using the --auto-mine option to mine blocks in our one-client network. If we were running on Mainnet, however, we would also be running a consensus client such as Lighthouse. There are three crates in the consensus directory: auto-seal, beacon and common. auto-seal is the one we are using for the demo, and beacon is the one we would use if we were running Lighthouse.

It is the job of the consensus client to propose blocks, broadcast them to other consensus clients and validate the blocks received. One proposer is picked and many validators check the result.

When operating, the consensus client uses the engine_* API calls to interact with the execution client.

The engine_* endpoints are normally served over HTTP on port 8551.

In Reth these endpoints are handled in rpc/rpc-engine-api, for example:

    /// Handler for `engine_forkchoiceUpdatedV2`
    async fn fork_choice_updated_v2(
        &self,
        fork_choice_state: ForkchoiceState,
        payload_attributes: Option<PayloadAttributes>,
    ) -> RpcResult<ForkchoiceUpdated> {
        trace!(target: "rpc::engine",
            "Serving engine_forkchoiceUpdatedV2");
        Ok(EngineApi::fork_choice_updated_v2(
            self, fork_choice_state, payload_attributes).await?
        )
    }

This sends a BeaconEngineMessage::ForkchoiceUpdated event to the engine task in consensus/beacon/src/engine, which in turn adds the mined block to the chain. In the case of a contested block (a re-org) we may need to revise our idea of the canonical chain. Much of this logic lives in the blockchain-tree crate.
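A re-org can be pictured as moving the head from one branch of a tree of blocks to another. The sketch below is a toy model, not the blockchain-tree crate: blocks are identified by small integers instead of 32-byte hashes, and the Tree type and its methods are hypothetical. It finds the blocks to unwind and the blocks to apply when the head changes.

```rust
use std::collections::HashMap;

/// Short stand-in for a 32-byte block hash.
pub type Hash = u64;

/// Known blocks, stored as child hash -> parent hash.
/// The genesis block has no entry.
#[derive(Default)]
pub struct Tree {
    pub parent: HashMap<Hash, Hash>,
}

impl Tree {
    /// Walk parent links from `head` back to genesis and return the
    /// chain oldest block first.
    pub fn chain_to(&self, head: Hash) -> Vec<Hash> {
        let mut chain = vec![head];
        let mut current = head;
        while let Some(&p) = self.parent.get(&current) {
            chain.push(p);
            current = p;
        }
        chain.reverse();
        chain
    }

    /// Blocks to unwind (newest first) and blocks to apply (oldest first)
    /// when the head moves from `old_head` to `new_head`.
    pub fn reorg(&self, old_head: Hash, new_head: Hash) -> (Vec<Hash>, Vec<Hash>) {
        let old = self.chain_to(old_head);
        let new = self.chain_to(new_head);
        // Length of the shared prefix, i.e. up to the common ancestor.
        let common = old.iter().zip(&new).take_while(|(a, b)| a == b).count();
        let mut unwind = old[common..].to_vec();
        unwind.reverse();
        (unwind, new[common..].to_vec())
    }
}
```

When the new head simply extends the old one, the unwind list is empty and only the new blocks are applied.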

In this context, a Payload is a set of transactions and parameters required to define a new block in the chain. In the case of --auto-mine this message is sent internally when a transaction is submitted to the transaction pool and we don't need a consensus client to mine the block.
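The auto-mine behaviour can be sketched as a pool that seals a block every time a transaction arrives. This is a toy model under stated assumptions, not the auto-seal crate: AutoSealer, Tx and Block are hypothetical types, and a real payload carries many more parameters than a block number and a transaction list.

```rust
/// A pending transaction, reduced to the fields this sketch needs.
#[derive(Clone, Debug, PartialEq, Eq)]
pub struct Tx {
    pub sender: u8,
    pub nonce: u64,
}

/// A sealed block: a number and the transactions it contains.
#[derive(Debug, PartialEq, Eq)]
pub struct Block {
    pub number: u64,
    pub transactions: Vec<Tx>,
}

/// Hypothetical sealer that mines as soon as a transaction is submitted.
#[derive(Default)]
pub struct AutoSealer {
    pool: Vec<Tx>,
    chain: Vec<Block>,
}

impl AutoSealer {
    /// Add a transaction to the pool and immediately seal a block
    /// containing everything currently in the pool.
    pub fn submit(&mut self, tx: Tx) -> &Block {
        self.pool.push(tx);
        // Genesis is block 0, so the first sealed block is number 1.
        let number = self.chain.len() as u64 + 1;
        let transactions = std::mem::take(&mut self.pool);
        self.chain.push(Block { number, transactions });
        self.chain.last().expect("a block was just pushed")
    }

    /// Number of blocks sealed so far.
    pub fn height(&self) -> u64 {
        self.chain.len() as u64
    }
}
```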

To validate the block, a number of checks have to be made. If you are stuck in a log cabin in the mountains for a few weeks, you may want to read through all the proof of stake EIPs! Indeed, the Reth authors must be congratulated on their perseverance.

Step 4: Execution

When a block is added to the chain, we must update the state of the blockchain by executing the transactions in the latest block.
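For a plain value transfer like ours, the state update is small enough to sketch by hand. The code below is a simplified model, not REVM: the names are hypothetical, balances are u128 rather than 256-bit integers, and the fee is simply deducted from the sender without being credited to anyone. The one protocol constant used is the 21000 gas charged for a transfer with no calldata.

```rust
use std::collections::HashMap;

pub type Address = [u8; 20];

/// The two account fields this sketch needs.
#[derive(Clone, Copy, Debug, Default, PartialEq, Eq)]
pub struct Account {
    pub nonce: u64,
    pub balance: u128,
}

/// Gas charged for a transaction with no calldata that creates no contract.
pub const TRANSFER_GAS: u128 = 21_000;

/// Apply a plain value transfer. The state is left untouched on error.
/// Simplification: the fee is removed from the sender but not paid out.
pub fn apply_transfer(
    state: &mut HashMap<Address, Account>,
    from: Address,
    to: Address,
    value: u128,
    gas_price: u128,
    nonce: u64,
) -> Result<(), String> {
    let fee = TRANSFER_GAS.checked_mul(gas_price).ok_or("fee overflow")?;
    let cost = value.checked_add(fee).ok_or("cost overflow")?;
    let sender = state.get(&from).copied().unwrap_or_default();
    if sender.nonce != nonce {
        return Err(format!("expected nonce {}, got {}", sender.nonce, nonce));
    }
    if sender.balance < cost {
        return Err("insufficient balance".to_string());
    }
    // All checks passed: debit the sender, bump the nonce, credit the receiver.
    state.insert(from, Account { nonce: nonce + 1, balance: sender.balance - cost });
    state.entry(to).or_default().balance += value;
    Ok(())
}
```

Checking the nonce and balance before touching the map keeps a failed transaction from leaving a half-applied state.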

Reth uses Dragan Rakita's excellent REVM crate, which encapsulates the execution side of Ethereum. REVM is based on the Ethereum organisation's execution-specs repository, which features a complete Python implementation of each of Ethereum's hard forks.

The REVM EVM object supports two calls to execute a transaction: transact, which takes a transaction and context and returns a list of updated account values and receipts, and inspect, which also takes an Inspector.

The inspectors used in Reth live in crates/revm/revm-inspectors and range from a collector of four-byte signatures to a full JavaScript implementation using boa. Inspectors also support the Geth tracing APIs.

We must also pay block mining fees to miners and apply the famous 'DAO fork' which refunded a number of accounts after a heist.

Inspectors are a great way to customise execution, for example by adding 'pre-compiles' or contracts written in Rust.
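The relationship between transact and inspect can be illustrated with a toy stack machine. This is not REVM's API: the Op enum, the Inspector trait and both functions below are hypothetical and only show the pattern of an execution loop that calls a hook before every instruction.

```rust
/// Instructions of a toy stack machine (not real EVM opcodes).
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub enum Op {
    Push(u64),
    Add,
    Mul,
}

/// Hook called before each instruction, in the spirit of an inspector.
pub trait Inspector {
    fn step(&mut self, pc: usize, op: Op, stack: &[u64]);
}

/// Inspector that does nothing, used by `transact`.
pub struct NoOp;

impl Inspector for NoOp {
    fn step(&mut self, _pc: usize, _op: Op, _stack: &[u64]) {}
}

/// Inspector that counts executed instructions.
#[derive(Default)]
pub struct StepCounter {
    pub steps: usize,
}

impl Inspector for StepCounter {
    fn step(&mut self, _pc: usize, _op: Op, _stack: &[u64]) {
        self.steps += 1;
    }
}

/// Run `code`, notifying `inspector` before each instruction, and
/// return the value left on top of the stack.
pub fn inspect<I: Inspector>(code: &[Op], inspector: &mut I) -> Result<u64, String> {
    let mut stack: Vec<u64> = Vec::new();
    for (pc, &op) in code.iter().enumerate() {
        inspector.step(pc, op, &stack);
        match op {
            Op::Push(value) => stack.push(value),
            Op::Add | Op::Mul => {
                let b = stack.pop().ok_or("stack underflow")?;
                let a = stack.pop().ok_or("stack underflow")?;
                let result = if op == Op::Add {
                    a.wrapping_add(b)
                } else {
                    a.wrapping_mul(b)
                };
                stack.push(result);
            }
        }
    }
    stack.pop().ok_or_else(|| "empty stack".to_string())
}

/// Run `code` without observing it.
pub fn transact(code: &[Op]) -> Result<u64, String> {
    inspect(code, &mut NoOp)
}
```

Because the hook only receives a read-only view of the stack in this sketch, an inspector can observe execution but cannot change its result.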

Step 5: Database

Once executed, receipts (including logs) and historical state changes are added to the database and the state root is updated for that block.

The database access is exposed through the traits defined in crates/storage/provider. These include readers and writers of various kinds for accounts, blocks, canonical chain, transactions, receipts and other things.

As an example, here is the storage reader trait:

    /// Storage reader
    #[auto_impl(&, Arc, Box)]
    pub trait StorageReader: Send + Sync {
        /// Get plainstate storages for addresses and storage keys.
        fn plainstate_storages(
            &self,
            addresses_with_keys: impl IntoIterator<Item =
                (Address, impl IntoIterator<Item = H256>)>,
        ) -> Result<Vec<(Address, Vec<StorageEntry>)>>;

        /// Iterate over storage changesets and return all storage
        /// slots that were changed.
        fn changed_storages_with_range(
            &self,
            range: RangeInclusive<BlockNumber>,
        ) -> Result<BTreeMap<Address, BTreeSet<H256>>>;

        /// Iterate over storage changesets and return all storage
        /// slots that were changed alongside each specific set of blocks.
        ///
        /// NOTE: Get inclusive range of blocks.
        fn changed_storages_and_blocks_with_range(
            &self,
            range: RangeInclusive<BlockNumber>,
        ) -> Result<BTreeMap<(Address, H256), Vec<u64>>>;
    }

The current database implementation is based on MDBX, a memory-mapped B+ tree key-value data store. Reth provides a wrapper for the C code as well as a minimal Rust layer, all contained in the project.

When Reth is stopped, you can dump stats and data from the database using the reth db command. This is a good way of learning about the database and its layout. The provider itself can be used outside of Reth, and there is an example in examples/db-access.rs.
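To make the changeset queries in the StorageReader trait more concrete, here is a minimal in-memory sketch of what changed_storages_with_range computes. The ChangeSets type is hypothetical and stores only which slots changed in each block. The real implementation reads from database tables and returns a Result.

```rust
use std::collections::{BTreeMap, BTreeSet};
use std::ops::RangeInclusive;

pub type Address = [u8; 20];
pub type StorageKey = [u8; 32];
pub type BlockNumber = u64;

/// Storage slots changed in each block: a simplified changeset table.
#[derive(Default)]
pub struct ChangeSets {
    pub by_block: BTreeMap<BlockNumber, Vec<(Address, StorageKey)>>,
}

impl ChangeSets {
    /// Collect every storage slot changed in the given inclusive block
    /// range, grouped by address and with duplicates removed.
    pub fn changed_storages_with_range(
        &self,
        range: RangeInclusive<BlockNumber>,
    ) -> BTreeMap<Address, BTreeSet<StorageKey>> {
        let mut changed: BTreeMap<Address, BTreeSet<StorageKey>> = BTreeMap::new();
        // BTreeMap::range panics when start > end, so handle that first.
        if range.is_empty() {
            return changed;
        }
        for (_block, slots) in self.by_block.range(range) {
            for (address, key) in slots {
                changed.entry(*address).or_default().insert(*key);
            }
        }
        changed
    }
}
```

Keying the table by block number is what makes the range query cheap: an ordered map can jump straight to the first block in the range.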

Step 6: RPC Access

Finally we return to RPC to examine the state of the chain. When we run:

    cast balance 0x944fDcD1c868E3cC566C78023CcB38A32cDA836E

We go back into the rpc module:

    /// Handler for: `eth_getBalance`
    async fn balance(&self, address: Address,
        block_number: Option<BlockId>) -> Result<U256> {
        trace!(target: "rpc::eth", ?address, ?block_number,
            "Serving eth_getBalance");
        Ok(self.on_blocking_task(|this| async move
            { this.balance(address, block_number) }).await?)
    }

The account balance comes from StateProvider using AccountReader to fetch basic account information.
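The shape of this lookup can be sketched with a small trait and an in-memory implementation. The trait below is a simplified stand-in written for this example (Reth's own reader returns a Result and uses 256-bit balances), and MemoryState is hypothetical. It shows that an account missing from the state reads as a zero balance, which is what eth_getBalance reports for an unused address.

```rust
use std::collections::HashMap;

pub type Address = [u8; 20];

/// Basic account information, reduced to two fields.
#[derive(Clone, Copy, Debug, Default, PartialEq, Eq)]
pub struct Account {
    pub nonce: u64,
    pub balance: u128,
}

/// Simplified stand-in for an account reader trait.
pub trait AccountReader {
    /// Return the account at `address`, or None if it does not exist.
    fn basic_account(&self, address: Address) -> Option<Account>;
}

/// Hypothetical state held entirely in memory.
pub struct MemoryState(pub HashMap<Address, Account>);

impl AccountReader for MemoryState {
    fn basic_account(&self, address: Address) -> Option<Account> {
        self.0.get(&address).copied()
    }
}

/// Balance lookup: zero for accounts that do not exist.
pub fn balance(reader: &impl AccountReader, address: Address) -> u128 {
    reader.basic_account(address).map_or(0, |account| account.balance)
}
```

Putting the lookup behind a trait is what lets the same handler run against a database, a historical state or a test fixture.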

Finally

Finally the journey of a transaction through Reth and back out of the chain is complete. We have covered only a small part of the Reth architecture and although it is early days yet, we see Reth being an important part of our mission at Pangea to decentralise data.

We're always on the lookout for talent

If you liked this article and want to work on decentralising blockchain data with ground breaking technologies in Rust please DM us: https://x.com/In_Pangea.

Further reading